Live Link Face

com.epicgames.FaceLinkApp

Total installs

10,000+

Rating

3.6 (110 reviews)

Released

July 7, 2020

Last updated

November 12, 2025

Category

Graphics & Design

Developer

Unreal Engine

Developer details

Name

Unreal Engine

E-mail

unknown

Website

https://www.unrealengine.com

Country

unknown

Address

unknown

iOS SDKs

- No items.

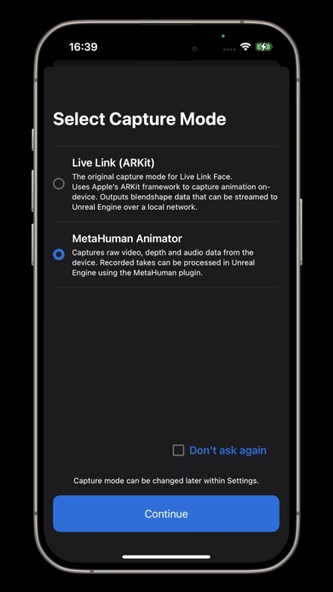

Screenshots

Description

Live Link Face brings effortless facial animation to Unreal Engine: capture performances on your iPhone or iPad for use in Unreal Engine.

Capture facial performances for MetaHuman Animator:

- Live Link Face supports both real-time and processed animation.

- MetaHuman Animator uses Live Link Face to capture performances, then applies its own processing to create high-fidelity facial animation for MetaHumans.

- The Live Link Face app captures raw video and depth data, which can be transmitted directly from your device into Unreal Engine for use with the MetaHuman plugin.

- Facial animation created with MetaHuman Animator can be applied to any MetaHuman character in just a few clicks.

- This workflow requires an iPhone (12 or above) and a desktop PC running Windows 10/11.

Real-time animation for MetaHumans:

- The Live Link Face app generates animation to drive a MetaHuman Character in real time.

- Animation data is streamed to Unreal Engine over a network using the MetaHuman Live Link Plugin.

- This workflow requires an iPhone (12 or above) and a desktop PC running Windows 10/11.

Real-time animation for non-MetaHuman characters:

- Stream out ARKit animation data live to an Unreal Engine instance via Live Link over a network.

- Visualize facial expressions in real time with live rendering in Unreal Engine.

- Drive a 3D preview mesh, optionally overlaid on the video reference on the phone.

- Record the raw ARKit animation data and front-facing video reference footage.

- Tune the capture data to the individual performer and improve facial animation quality with rest pose calibration.

Timecode support for multi-device synchronization:

- Select the system clock or an NTP server as the timecode source, or use a Tentacle Sync device to connect with a master clock on stage.

- Video reference is frame accurate with embedded timecode for editorial.
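
To illustrate what frame-accurate timecode means in practice, here is a minimal Python sketch that turns a clock reading (seconds since midnight) into an `HH:MM:SS:FF` label. It assumes non-drop-frame timecode at an integer frame rate; the function name and defaults are hypothetical and do not describe how the app itself derives timecode.

```python
def seconds_to_timecode(seconds_since_midnight: float, fps: int = 30) -> str:
    """Format a clock reading as non-drop-frame HH:MM:SS:FF timecode.

    Assumption: integer frame rate and no drop-frame compensation.
    """
    if fps <= 0:
        raise ValueError("fps must be a positive integer")
    # Count whole frames elapsed since midnight.
    total_frames = int(seconds_since_midnight * fps)
    frames = total_frames % fps
    total_seconds = total_frames // fps
    seconds = total_seconds % 60
    minutes = (total_seconds // 60) % 60
    hours = (total_seconds // 3600) % 24  # wrap at midnight
    return f"{hours:02d}:{minutes:02d}:{seconds:02d}:{frames:02d}"
```

Two devices that read the same clock and use the same frame rate would label the same instant with the same string, which is what lets editorial line up takes from multiple sources.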

Control Live Link Face remotely with OSC or via the MetaHuman Plugin for Unreal Engine:

- Trigger recording remotely so actors can focus on their performances.

- Capture slate names and take numbers consistently.

- Extract data for processing and storage.
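
As a rough illustration of remote control over OSC, the Python sketch below encodes an OSC 1.0 message by hand and sends it over UDP using only the standard library. The command addresses `/RecordStart` (with a slate string and a take number) and `/RecordStop`, along with any host and port you supply, are assumptions here; check the app's OSC settings and documentation for the exact commands and listening port.

```python
import socket
import struct


def _pad(data: bytes) -> bytes:
    """Null-terminate and pad to a multiple of 4 bytes (OSC 1.0 string rule)."""
    data += b"\x00"
    return data + b"\x00" * (-len(data) % 4)


def osc_message(address: str, *args) -> bytes:
    """Encode an OSC message with int32 and string arguments only."""
    tags = ","
    payload = b""
    for arg in args:
        if isinstance(arg, bool):
            raise TypeError("bool arguments are not supported in this sketch")
        if isinstance(arg, int):
            tags += "i"
            payload += struct.pack(">i", arg)  # big-endian int32
        elif isinstance(arg, str):
            tags += "s"
            payload += _pad(arg.encode("ascii"))
        else:
            raise TypeError(f"unsupported argument type: {type(arg).__name__}")
    return _pad(address.encode("ascii")) + _pad(tags.encode("ascii")) + payload


def send(packet: bytes, host: str, port: int) -> None:
    """Send one OSC packet over UDP to the device running the app."""
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.sendto(packet, (host, port))
```

For example, `send(osc_message("/RecordStart", "Scene1", 3), host, port)` would request a recording with slate "Scene1" and take 3, and `send(osc_message("/RecordStop"), host, port)` would end it, provided the app accepts those addresses.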

Browse and manage the library of captured takes:

- Delete takes within Live Link Face or share them via AirDrop.

- Transfer directly over network when using MetaHuman Animator.

- Review captured footage on-device.